If you are wondering how to optimize the WordPress robots.txt file for the best SEO, you have landed in the right place. In this article, we explain what the WordPress robots.txt file is, how to create and test it, and how to optimize it.

WordPress automatically serves a robots.txt file for your website. It is a core part of your site’s SEO because search engines read it when they crawl your site’s content.

Optimizing the robots.txt file on your WordPress website takes more effort than adding keywords to your content, but it is important if you want to advance your SEO. We have created this guide to help you perfect the file and raise your search ranking.

What is a robots.txt File?

A robots.txt file is a set of commands for search engine crawler bots such as Googlebot. On most websites, this file sits in the root folder. Its main purpose is to manage crawler activity: you use it to tell search engines which pages to crawl and which to ignore.

Let’s make this easier to understand. We have used terms like bots, Google crawlers, source files, and indexing. To make sense of them, it helps to know the basic steps Google follows to decide which pages and websites rank higher in search results.

First, search engines send out crawler bots, such as Googlebot, to navigate the internet and find new websites and pages. This process is called crawling, and while crawling your site the bots follow the commands in your robots.txt file. After finding your website, the search engine indexes its pages, storing them in a structured database.

Once the pages are indexed, the next step is ranking them on SERPs. How well a page ranks depends largely on how well it is optimized: the better optimized it is, the better it will perform in search results.

What Does a Robots.txt File Look Like?

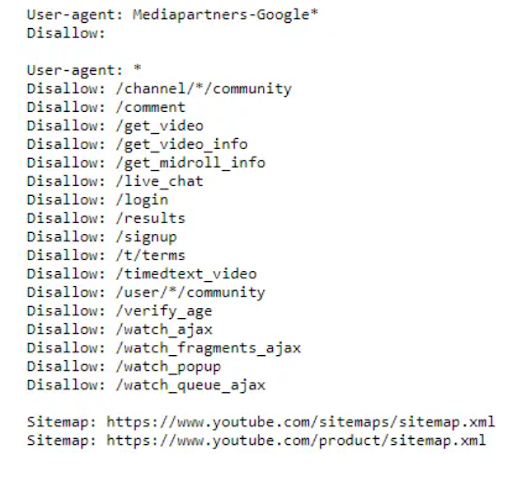

The format of a robots.txt file is very simple. You can view any website’s robots.txt file by adding /robots.txt to the end of its domain name. Typically, the first line names the user agent, which is the search bot you are addressing, for example Googlebot, Bingbot, or Slurp. Use an asterisk (*) as the user agent to address all bots at once.

The following lines contain Allow or Disallow commands, which tell search engines which pages they may crawl and which they should skip. Let’s see an example to understand this better.

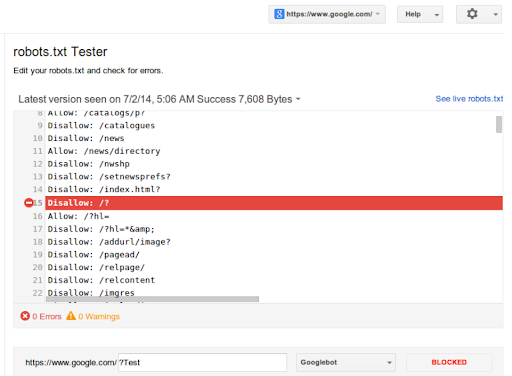

As you can see in the image, we have put a set of commands in the robots.txt file. The user agent is set to Googlebot, and the next line adds a Disallow command, which tells Google which pages not to crawl.
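The image shows only the rules themselves, so here is a minimal Python sketch of how a crawler interprets a Googlebot-only group, using the standard library’s `urllib.robotparser` (a simplified parser, not Google’s own). The `/private-page/` path and the catch-all `*` group are hypothetical additions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: a Googlebot-only group like the one in the image
# (the /private-page/ path is made up), plus a catch-all group for
# every other crawler.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private-page/

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes a list of lines

# Ask the parser what each crawler may fetch.
for agent in ("Googlebot", "Bingbot"):
    for path in ("/private-page/", "/wp-admin/"):
        verdict = "allowed" if parser.can_fetch(agent, path) else "blocked"
        print(f"{agent:10} {path:15} {verdict}")
```

Because a crawler follows only the group that matches it, Googlebot ignores the `*` group here and may crawl /wp-admin/, while Bingbot falls back to the `*` rules and may crawl /private-page/.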

You can also add a Sitemap line to your robots.txt file. It makes it easier for search engines to find all the pages of your site.
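A minimal Python sketch of how a parser picks up that Sitemap line, again using `urllib.robotparser`. The sitemap URL is a placeholder that assumes WordPress’s core sitemap path on example.com; use your own site’s URL.

```python
from urllib.robotparser import RobotFileParser

# Placeholder domain and sitemap path; replace with your own site's URL.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://example.com/wp-sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() (Python 3.8+) returns the listed Sitemap URLs, or None if there are none.
print(parser.site_maps())  # ['https://example.com/wp-sitemap.xml']
```

The Sitemap line does not belong to any User-agent group, so every crawler that reads the file can use it.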

Why Do You Need a Robots.txt file on your WordPress Site?

If you own a WordPress website, it generates a robots.txt file by default. Let’s explore some of the reasons why this file is so important for your WordPress site.

Optimize the Crawl Quota

Crawl quota (often called crawl budget) is the number of pages a search engine will crawl on your website within a given time. Without a robots.txt file, search engine bots may waste this quota crawling pages at random, including pages you don’t want to appear on the SERP (Search Engine Results Page). A robots.txt file helps you make the most of your site’s crawl quota.

For example, if you sell products or services through your WordPress website, you would ideally like your best-converting sales page to show up first on the SERP. The robots.txt file cannot tell crawlers which page to visit first, but you can use it to disallow low-value pages so that crawlers spend more of your quota on the pages that matter.
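To make the crawl-quota idea concrete, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The rules and URL paths are hypothetical pages on a store site; the point is that only the URLs the rules allow are left competing for the crawl quota.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers away from low-value pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /cart/
Disallow: /checkout/
"""

# Hypothetical URL paths a crawler might discover on a store site.
DISCOVERED = [
    "/wp-admin/edit.php",
    "/cart/",
    "/checkout/",
    "/products/running-shoes/",
    "/sale/",
]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Only the allowed URLs are left to compete for the crawl quota.
crawlable = [path for path in DISCOVERED if parser.can_fetch("Googlebot", path)]
print(crawlable)  # ['/products/running-shoes/', '/sale/']
```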

Rank the Important Landing Pages

By optimizing the robots.txt file, you can make sure that the landing pages you want to show up first in SERPs are simple and quick for crawler bots to find. You can also make sure your blog articles appear in SERPs, not just your standard landing pages, by dividing your site index into “pages” and “posts”.

For instance, if your website has many pages and your customer data shows that your blog posts generate a lot of sales, you can use sitemaps in your robots.txt file to make sure your blog posts show up on SERPs.


Enhance the SEO Quality of your WordPress Site

Optimizing the robots.txt file can help your WordPress website rank higher in the SERPs. Taking control of the file helps improve your site’s overall SEO quality.

However, this is not the only way to ensure good SEO for your website. SEO-friendly content matters a lot for search rankings. Still, editing your robots.txt file is something you can easily handle on your own.

How to create a WordPress robots.txt file

Now that we know what a robots.txt file is, what it looks like, and why it’s important for a WordPress website, let’s look at how to create one. There are many ways to do it, but we will show you the two easiest.

Create a WordPress robots.txt file Manually

By default, WordPress serves a virtual robots.txt file, so you may not find a physical file on your server. If you want to customize the rules, create the file yourself. First, create a text file on your computer using a text editor such as Notepad. Then add your rules (User-agent, Allow, Disallow, and Sitemap lines) and save the document as robots.txt.
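If you prefer to script this step instead of typing the rules into a text editor, here is a minimal Python sketch. The rule set is an assumption modeled on the defaults WordPress typically serves, example.com is a placeholder domain, and `write_robots_txt` is a hypothetical helper, not part of WordPress.

```python
from pathlib import Path
from typing import Sequence

# Assumed starting rules, modeled on the defaults WordPress typically serves.
# example.com is a placeholder: replace it with your own domain.
RULES = [
    "User-agent: *",
    "Disallow: /wp-admin/",
    "Allow: /wp-admin/admin-ajax.php",
    "",
    "Sitemap: https://example.com/wp-sitemap.xml",
]


def write_robots_txt(path: Path, rules: Sequence[str]) -> None:
    """Save the rules as plain UTF-8 text, one directive per line."""
    path.write_text("\n".join(rules) + "\n", encoding="utf-8")


# Creates robots.txt in the current folder, ready to upload to your site's root.
write_robots_txt(Path("robots.txt"), RULES)
```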

If the file already exists and you want to edit it, connect to your website’s server with an FTP client using the login details from your hosting account. You will find the robots.txt file in the WordPress website’s root folder. To edit it, right-click the file and choose the edit option.

When you have finished creating or editing the robots.txt file, upload it with your FTP client, using the file upload option in the root folder of your website.

Finally, to check whether the file was uploaded successfully, add “/robots.txt” to the end of your website’s URL and open it in a browser.
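Crawlers only look for robots.txt at the root of a domain, so its URL is always the scheme and host followed by /robots.txt. Here is a minimal Python sketch, with hypothetical helper names, that builds that URL from any page on your site and downloads the live file so you can compare it with the one you uploaded.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_url(site_url: str) -> str:
    """Return the robots.txt URL for the host of any page URL."""
    parts = urlsplit(site_url)
    # Keep only the scheme and host; robots.txt always lives at the root.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(site_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt the server is currently serving."""
    with urlopen(robots_url(site_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("https://example.com/blog/hello-world/"))  # https://example.com/robots.txt
```

For example, `fetch_robots("https://example.com")`, with your own domain in place of the placeholder, returns the text of the live file.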

Create a robots.txt file with WordPress Plugin

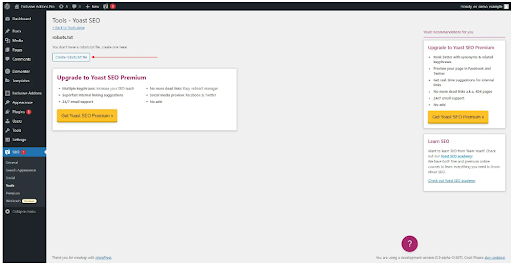

You can also create a robots.txt file with a WordPress plugin. Several plugins can do this; here we demonstrate the Yoast SEO plugin. Let’s get started.

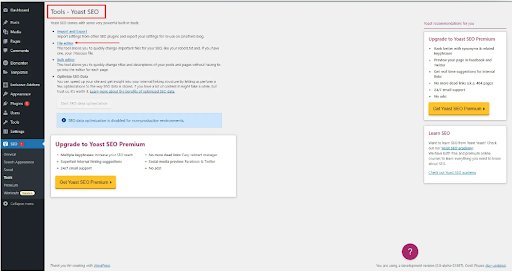

First, install and activate the Yoast SEO plugin. Then go to Yoast SEO > Tools and select File Editor.

You will now see the “Create robots.txt File” option. Click it to create the file.

The file will contain some default commands, such as User-agent, Allow, and Disallow. You can easily add other commands to it, which gives you full control over your robots.txt file.

How to test WordPress robots.txt File?

Once you have created or edited the file, test it to make sure you haven’t made any mistakes. A single error, such as a stray “Disallow: /”, can block search engines from crawling your entire website and remove it from the SERPs.

You can test your file with the free robots.txt testing tool in Google Search Console (formerly Google Webmaster Tools). You only need to enter the URL of your homepage. The tool flags any faulty lines in the file with syntax warnings and logic errors.

You can also enter any page from your website, select a user agent, and run the test to see whether that page is allowed or blocked. If needed, you can edit the rules in the testing tool and run the test again.
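For a quick local check before using Google’s tool, here is a rough Python sketch. `lint_robots` and `is_allowed` are hypothetical helpers: the linter flags only a few common mistakes (misspelled directives, missing colons, rules before any User-agent line) and is far less thorough than Google’s tester, and `is_allowed` relies on `urllib.robotparser`, which uses simple prefix matching and does not understand `*` or `$` wildcards in paths.

```python
from urllib.robotparser import RobotFileParser

# Directives this sketch accepts; an assumption, not an official list.
KNOWN = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list:
    """Return (line_number, message) pairs for lines that look malformed."""
    problems = []
    seen_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field not in KNOWN:
            problems.append((number, f"unknown directive '{field}'"))
        elif field == "user-agent":
            seen_agent = True
        elif field in ("allow", "disallow") and not seen_agent:
            problems.append((number, "rule appears before any User-agent line"))
    return problems


def is_allowed(text: str, agent: str, path: str) -> bool:
    """Check one URL path for one user agent, like the tester's URL box."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser.can_fetch(agent, path)


# A hypothetical file with two typos (lines 1 and 3).
SAMPLE = """\
Disalow: /wp-admin/
User-agent: *
Disallow /readme.html
Disallow: /wp-admin/
"""

for number, message in lint_robots(SAMPLE):
    print(f"line {number}: {message}")
print(is_allowed(SAMPLE, "Googlebot", "/wp-admin/"))
```

On this sample, the linter reports lines 1 and 3, while the parser silently ignores both broken lines. That silence is exactly why such typos are easy to miss without a test.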

Note that editing rules in the testing tool does not change your live file. To keep your changes, copy the modified rules into your robots.txt editor and save them there.

How to Optimize WordPress Robots.txt File for SEO

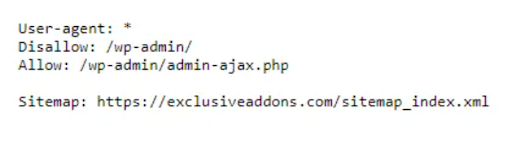

The easiest way to optimize the file for SEO is to choose the pages you want to disallow, such as WordPress paths like /wp-admin/, /wp-content/plugins/, /readme.html, and /trackback/.
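To spot-check rules like these, here is a minimal Python sketch with `urllib.robotparser`. It adds WordPress’s usual `Allow: /wp-admin/admin-ajax.php` exception (an assumption; drop it if your site doesn’t need it) and places it first, because `urllib.robotparser` applies the first matching rule, whereas Google applies the most specific (longest) match. The URL paths being checked are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The paths named above, plus WordPress's usual admin-ajax.php exception.
# Allow comes first because urllib.robotparser uses the first matching rule.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Disallow: /wp-content/plugins/
Disallow: /readme.html
Disallow: /trackback/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths: the first four should be blocked, the rest crawlable.
CHECKS = [
    "/wp-admin/options.php",
    "/wp-content/plugins/some-plugin/script.js",
    "/readme.html",
    "/trackback/",
    "/wp-admin/admin-ajax.php",
    "/wp-content/uploads/2024/01/photo.jpg",
    "/hello-world/",
]

for path in CHECKS:
    verdict = "allowed" if parser.can_fetch("Googlebot", path) else "blocked"
    print(f"{path:45} {verdict}")
```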

Let’s look at a few more ways to optimize the robots.txt file.

Adding Sitemaps to the Robots.txt File

WordPress automatically generates standard sitemaps for your blog or website. On WordPress.com sites you can find them at example.wordpress.com/sitemap.xml, and self-hosted sites running WordPress 5.5 or later serve them at /wp-sitemap.xml. To help crawlers find your sitemap, add a Sitemap line with its full URL to your robots.txt file.
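Here is a minimal Python sketch of adding a Sitemap line to an existing file without duplicating it. `add_sitemap` is a hypothetical helper, and the sitemap URL assumes the core WordPress path on a placeholder domain.

```python
from urllib.robotparser import RobotFileParser


def add_sitemap(robots_txt: str, sitemap_url: str) -> str:
    """Append a Sitemap line unless the same URL is already listed.

    Sitemap lines are independent of User-agent groups, so appending
    one at the end of the file is enough.
    """
    existing = {
        line.strip().split(":", 1)[1].strip()
        for line in robots_txt.splitlines()
        if line.strip().lower().startswith("sitemap:")
    }
    if sitemap_url in existing:
        return robots_txt  # already listed: leave the file unchanged
    text = robots_txt if robots_txt.endswith("\n") else robots_txt + "\n"
    return text + f"\nSitemap: {sitemap_url}\n"


original = "User-agent: *\nDisallow: /wp-admin/\n"
updated = add_sitemap(original, "https://example.com/wp-sitemap.xml")
updated = add_sitemap(updated, "https://example.com/wp-sitemap.xml")  # no duplicate added

parser = RobotFileParser()
parser.parse(updated.splitlines())
print(parser.site_maps())  # ['https://example.com/wp-sitemap.xml']
```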

Try the Less-Is-More Approach

When updating or optimizing your WordPress robots.txt file, it’s better to take a less-is-more approach. Disallow only the less important pages on your website; crawlers will skip them and spend their time on the pages you allow instead. This is how you optimize the file for SEO: it reduces unnecessary crawler traffic, helps bots find your key pages, and can even help your website rank higher in SERPs.
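One concrete reason to keep rules few and specific: each Disallow value is matched as a path prefix, so a short, broad value can block far more than intended. This Python sketch compares a broad rule with a targeted one; the paths are hypothetical and the check uses `urllib.robotparser`, with `blocked` as a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser


def blocked(rules: str, paths: list) -> list:
    """Return the paths that Googlebot may not fetch under the given rules."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [path for path in paths if not parser.can_fetch("Googlebot", path)]


# Hypothetical site paths.
PATHS = [
    "/wp-admin/edit.php",
    "/wp-content/uploads/logo.png",
    "/products/running-shoes/",
]

# Broad: "/wp" is a prefix of every WordPress path, including uploaded images.
BROAD = "User-agent: *\nDisallow: /wp\n"
# Targeted: blocks only the admin area.
TARGETED = "User-agent: *\nDisallow: /wp-admin/\n"

print(blocked(BROAD, PATHS))     # ['/wp-admin/edit.php', '/wp-content/uploads/logo.png']
print(blocked(TARGETED, PATHS))  # ['/wp-admin/edit.php']
```

The broad rule also hides images under /wp-content/uploads/, while the targeted rule blocks only what was intended.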

Conclusion

In this guide, we covered the essentials of optimizing the WordPress robots.txt file, from the basics of creating the file to testing and optimizing it.

You should now be able to disallow pages you don’t want search engines to crawl, optimize the file by adding sitemaps, and test whether your changes work as intended.